There are devices built that capture these sounds and represent them in a computer-readable format. Examples of these formats are … A typical audio processing pipeline involves extracting acoustic features relevant to the task at hand, followed by decision-making schemes that involve detection, classification, and knowledge fusion.

The sampling frequency, or sampling rate, is the number of samples taken over a fixed amount of time. A high sampling frequency results in less information loss but higher computational expense, while a low sampling frequency loses more information but is fast and cheap to compute.
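The trade-off between sampling rates can be sketched with Python's standard library. This is a minimal illustration, not code from the article. The `sample_tone` helper, the 5 kHz and 3 kHz tones, and the 44.1 kHz and 8 kHz rates are assumptions chosen for demonstration.

The sketch samples the same one-second 5 kHz tone at two rates:

- **Computational cost:** the higher rate produces about 5.5 times as many samples to store and process.
- **Information loss:** at the lower rate, the samples are identical to those of a 3 kHz tone, so the two cannot be told apart afterwards. This effect, known as aliasing, happens whenever a frequency lies above half the sampling rate.

```python
import math


def sample_tone(freq_hz, sample_rate_hz, duration_s):
    """Return samples of a unit-amplitude cosine taken at sample_rate_hz (hypothetical helper)."""
    n_samples = int(sample_rate_hz * duration_s)
    return [math.cos(2 * math.pi * freq_hz * n / sample_rate_hz) for n in range(n_samples)]


# Higher rate: more samples to store and process for the same second of audio.
hi = sample_tone(5000, 44100, 1.0)
lo = sample_tone(5000, 8000, 1.0)
print(len(hi), len(lo))

# Information loss at the low rate: a 5 kHz tone sampled at 8 kHz yields the
# same samples as a 3 kHz tone (8000 - 5000 = 3000), so the original is ambiguous.
alias = sample_tone(3000, 8000, 1.0)
print(all(math.isclose(a, b, abs_tol=1e-6) for a, b in zip(lo, alias)))
```

A real pipeline would read samples from a recording rather than synthesize them. Using a pure tone simply makes the loss easy to verify.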

# Python sounddevice records an empty wav file series

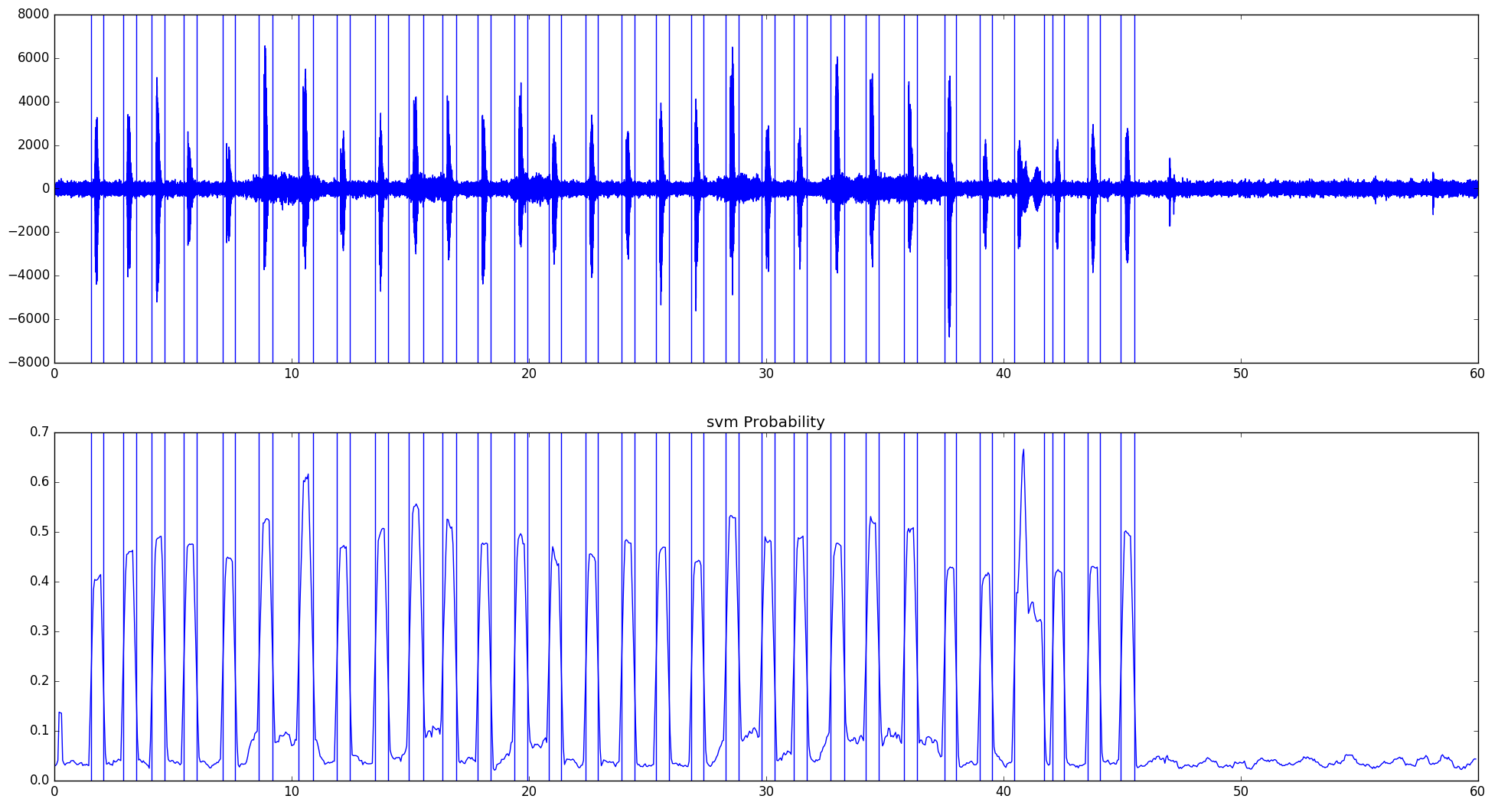

While much of the literature and buzz on deep learning concerns computer vision and natural language processing (NLP), audio analysis is a growing subdomain of deep learning applications. The field includes automatic speech recognition (ASR), digital signal processing, and music classification, tagging, and generation. Some of the most popular and widespread machine learning systems, the virtual assistants Alexa, Siri, and Google Home, are largely products built atop models that can extract information from audio signals.

Audio data analysis is about analyzing and understanding audio signals captured by digital devices. It has numerous applications in the enterprise, healthcare, productivity, and smart cities, including:

- customer satisfaction analysis from customer support calls
- media content analysis and retrieval
- medical diagnostic aids and patient monitoring
- assistive technologies for people with hearing impairments
- audio analysis for public safety

In the first part of this article series, we will cover everything you need to know before getting started with audio data analysis and extract the necessary features from a sound/audio file. We will also build an Artificial Neural Network (ANN) for music genre classification. In the second part, we will accomplish the same task with a Convolutional Neural Network (CNN) and compare the accuracy of the two models.

In signal processing, sampling is the reduction of a continuous signal into a series of discrete values. Sound waves are digitized by sampling them at regular intervals. The number of samples taken per second is the sampling rate, typically 44.1 kHz for CD-quality audio, meaning samples are taken 44,100 times per second.

Each sample is the amplitude of the wave at a particular point in time. The bit depth determines how detailed each sample can be, and therefore the dynamic range of the signal. The typical bit depth is 16 bits, which means a sample can take one of 2^16 = 65,536 amplitude values.

Genre classification using Artificial Neural Networks (ANN)

The sound excerpts are digital audio files in …
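The sampling rate and bit depth described above map directly onto the parameters of an uncompressed WAV file. The following is a minimal sketch using Python's standard-library `wave` module, assuming mono 16-bit PCM at the 44.1 kHz CD rate.

The 440 Hz tone, the half-scale amplitude, the 0.5 s duration, and the `quantize` and `tone_wav_bytes` helpers are hypothetical choices for illustration. The sketch rounds each amplitude to one of the 65,536 levels a 16-bit sample allows and writes the result as an in-memory WAV file.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 44_100       # CD-quality rate: 44,100 samples per second
BIT_DEPTH = 16             # bits per sample
LEVELS = 2 ** BIT_DEPTH    # 65,536 distinct amplitude values
MAX_INT = LEVELS // 2 - 1  # 32,767: largest signed 16-bit value


def quantize(x):
    """Clamp an amplitude to [-1.0, 1.0] and scale it to a signed 16-bit integer (hypothetical helper)."""
    x = max(-1.0, min(1.0, x))
    return int(round(x * MAX_INT))


def tone_wav_bytes(freq_hz=440.0, duration_s=0.5):
    """Return a mono 16-bit PCM WAV file, held in memory, containing a sine tone (hypothetical helper)."""
    n_samples = int(SAMPLE_RATE * duration_s)
    # Each sample is the wave's amplitude at time n / SAMPLE_RATE, quantized to 16 bits.
    samples = [quantize(0.5 * math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE))
               for n in range(n_samples)]
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(BIT_DEPTH // 8)  # 2 bytes per sample
        wf.setframerate(SAMPLE_RATE)
        # "<h" = little-endian signed 16-bit integers, the byte order PCM WAV uses
        wf.writeframes(struct.pack(f"<{n_samples}h", *samples))
    return buf.getvalue()


data = tone_wav_bytes()
with wave.open(io.BytesIO(data), "rb") as wf:
    print(wf.getframerate(), wf.getsampwidth(), wf.getnframes())
```

Writing into `io.BytesIO` keeps the sketch free of file-system side effects. Passing a file path to `wave.open` instead of the buffer would write the same data to disk.

The full signed 16-bit range is -32,768 to 32,767. This sketch uses a symmetric scale of ±32,767 so that 1.0 and -1.0 map to equal magnitudes.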
